A Scalable 3-Stage Approach to Designing New Software

Overthinking the scalability of your system design is a common problem during new software development. Your idea is fresh and the possibilities are limitless, but you may be worried about what will happen if you need to handle thousands, or even millions, of users.

In reality, unless you already have an established product whose scale you can accurately estimate, overengineering your system out of the gate is wasted effort at best and disastrous at worst.

I’ve witnessed a lot of groups dive straight into microservices or complex distributed architectures with many specialized teams involved. Timelines explode, costs skyrocket, features get cut, and product innovation dies. If they ship a product at all, the architecture can be so overcomplicated that nobody understands it, and maintenance and enhancements become a nightmare.

Of course, this isn’t always the case. Sometimes it’s worth going all in from the start. There’s also the nightmare scenario of going viral, becoming an overnight sensation, and watching your system crumble with no quick rescue in sight. However, that kind of scenario is exceedingly rare.

A more measured approach, with a plan for scalability, is the prudent tactic in the vast majority of cases. In this post, I’ll explain why we suggest a three-stage approach and use a real-world example to explore each stage.

The 3-Stage Approach

In my experience, the best way to handle a new system design is in three stages:

- A well-designed monolithic system that’s solid but quickly developed and scalable vertically

- A refactor that prepares the system to scale horizontally

- A possible minor refactor, followed by actually scaling the system

By following these stages, you put your product into use much more quickly and cheaply than if you jumped straight to a fully distributed design. Your team also stays more agile at a crucial time in product development.

The goal of any new software project is to outgrow Stage 1: to attract so many users and so much usage that you simply cannot scale vertically any further (or obvious bottlenecks appear).

But here’s a secret: a Stage 1 monolith can scale surprisingly far if your software is built well. If you keep performance in mind throughout development, you can get the most out of limited hardware resources.

A Real-World Example

With this frame of reference, let’s look at a system design we’ve implemented for transmitting, processing, and ingesting data.

At a high level, our requirements are pretty simple and can apply to many scenarios.

- Data is sent to an API endpoint with a JSON payload

- The data is processed with our business rules

- The data is persisted to a database (in our case, PostgreSQL)

- The sending party receives a record ID

Note that the real system does more, including reading account-based data, caching, and matching data to client accounts; we’ll ignore those parts here.

Stage 1

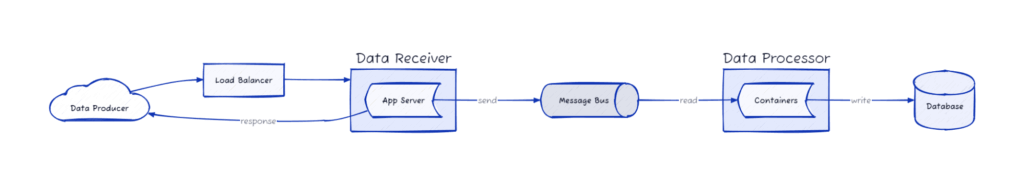

Taking our requirements at face value, and avoiding the overengineering pitfall, we arrived at the system design shown above.

We put our initial effort into developing the Data Receiver and Data Processor, which together insert the transformed data into our database and then return the new record’s ID to the client (the Data Producer).
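To make the Stage 1 flow concrete, here is a minimal Python sketch of a single synchronous request path. It is not our production code: `sqlite3` stands in for PostgreSQL so the example runs anywhere, and `make_db`, `process`, and `handle_request` are hypothetical names with a placeholder business rule. The point is the shape: parsing, processing, and the database insert all happen before the client gets its ID.

```python
import json
import sqlite3


def make_db() -> sqlite3.Connection:
    # In-memory SQLite stands in for PostgreSQL in this sketch.
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE records (id INTEGER PRIMARY KEY AUTOINCREMENT, payload TEXT NOT NULL)"
    )
    return conn


def process(data: dict) -> dict:
    """Hypothetical business rule: lowercase keys and trim string values."""
    return {
        str(k).lower(): v.strip() if isinstance(v, str) else v
        for k, v in data.items()
    }


def handle_request(conn: sqlite3.Connection, body: str) -> dict:
    """Stage 1: receive, process, and persist in one call while the client waits."""
    data = json.loads(body)          # Data Receiver: parse the JSON payload (assumed to be an object)
    transformed = process(data)      # Data Processor: apply the business rules
    with conn:                       # commits on success, rolls back on an exception
        cur = conn.execute(
            "INSERT INTO records (payload) VALUES (?)", (json.dumps(transformed),)
        )
    return {"id": cur.lastrowid}     # only now does the client receive its record ID
```

Calling `handle_request(make_db(), '{"Name": " Ada "}')` on a fresh database returns `{'id': 1}`. Every millisecond spent in `process` and in the insert is a millisecond the Data Producer waits.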

We spent a little extra time optimizing the performance of the receiver/processor so that the setup would carry us through the Stage 2 work in the event of moderate success.

Still, in Stage 1 the entire system runs on a single Azure Web App and a single PostgreSQL database, at a total cost of around $60 a month. Even on a very low compute tier, this setup easily handled 50,000 transactions per day with plenty of headroom (7% average utilization), but it was far from optimal.

The problems are pretty obvious here:

- The data receiver is doing work

- The data processor is doing work

- The database inserts take time

- The client waits for all work to be completed before receiving a response

- Two single points of failure (the web app and the database)

Despite these inefficiencies, responses to the client were still subsecond (average 200ms).
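As a rough back-of-the-envelope check (our own arithmetic, not a separate measurement), the Stage 1 figures of 50,000 transactions per day and a 200 ms average response can be combined with Little's law, L = λW. This assumes traffic is spread evenly across the day, which real traffic never is, so peaks would be higher:

```latex
\lambda = \frac{50{,}000\ \text{requests}}{86{,}400\ \text{s}} \approx 0.58\ \text{requests/s}

L = \lambda W \approx 0.58\ \tfrac{\text{requests}}{\text{s}} \times 0.2\ \text{s} \approx 0.12\ \text{requests in flight}
```

On average, fewer than one request is in flight at any moment, which helps explain how a low compute tier coped so comfortably.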

What we gained with this approach:

- Learnings on the actual usage of our product in the wild

- Insights into performance characteristics and bottlenecks

- Projections for future resource scaling

- Reduced complexity and increased speed for initial software development

- Rapid ability to maintain and modify the software

- Dead simple deployment process and maintenance

- Low-cost operation

While we clearly don’t want to stay in this setup for long, starting here has real advantages: it gets an initial product shipped, saves on infrastructure costs, yields learnings and insights, and buys us time for Stage 2 while we validate the product.

Stage 2

It’s important to note that we can stay in Stage 1 as long as necessary at minimal cost, and scale up the resources vertically if the demand increases. This is valuable risk mitigation for an unproven product.

Once we’re gaining traction – and increasing product viability – we can begin to work on our Stage 2 refactor.

Our goals for this stage are:

- Split the Data Receiver and Data Processor into separate applications

- Keep data processing and persistence from delaying the client response

- Rearchitect the infrastructure to become horizontally scalable

- Remove single points of failure

- Deploy to new infrastructure at a small scale

This stage introduces system complexity and increases infrastructure costs. A distributed architecture, by nature, requires more individual resources and services to be deployed. Costs can still be managed by deploying only the minimum resources needed to fulfill the design.

In my real-world example, this boils down to:

- Introducing a message bus (Azure Service Bus, in our case)

- A load-balanced deployment of Data Receivers

- A containerized deployment of the Data Processors

- High-availability PostgreSQL
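A minimal Python sketch of the Stage 2 split follows, modeling the goal of keeping processing and persistence out of the client response. It is hedged in a few ways: `queue.Queue` stands in for Azure Service Bus, a dict stands in for PostgreSQL, and `receive`/`process_one` are hypothetical names. The requirements say the sending party receives a record ID, but not how that ID is produced once persistence is asynchronous; generating a UUID in the receiver is one common option and is an assumption here.

```python
import json
import queue
import uuid

# Stand-ins for illustration only: the message bus and the PostgreSQL table.
bus: "queue.Queue[str]" = queue.Queue()
database: dict = {}


def receive(body: str) -> dict:
    """Data Receiver: validate, assign an ID, enqueue, and respond immediately."""
    data = json.loads(body)                   # reject malformed JSON before enqueueing
    record_id = str(uuid.uuid4())             # assumption: ID generated before persistence
    bus.put(json.dumps({"id": record_id, "data": data}))
    return {"id": record_id}                  # client no longer waits on processing


def process_one(timeout: float = 1.0) -> bool:
    """Data Processor: take one message off the bus, apply rules, and persist it."""
    try:
        message = json.loads(bus.get(timeout=timeout))
    except queue.Empty:
        return False                          # nothing to do right now
    transformed = {k.lower(): v for k, v in message["data"].items()}  # hypothetical rule
    database[message["id"]] = transformed     # stand-in for the PostgreSQL insert
    bus.task_done()
    return True
```

In production the bus is durable, and the processor should settle (complete) a message only after its insert commits, so a crash mid-processing doesn't lose data; `queue.Queue` provides neither guarantee.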

However, in Stage 2, we don’t have to scale out each of these areas unless demand actually warrants it. It’s more about having laid the groundwork to scale quickly in the event it becomes necessary.

For example, we know we might need one or more read replicas of our database. We’re ready to add them on short notice, but we won’t add them, or incur their cost, until they’re necessary. If high availability is a priority, you might add one read replica at this time.
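Being ready to add replicas quickly mostly comes down to routing reads and writes separately from the start. The sketch below is a hypothetical `DatabaseRouter` in Python (not part of any library) that sends writes to the primary and rotates reads across whatever replicas are registered, falling back to the primary when there are none. The connection names are placeholders.

```python
from typing import Optional


class DatabaseRouter:
    """Hypothetical router: writes go to the primary, reads rotate across replicas."""

    def __init__(self, primary: str, replicas: Optional[list] = None) -> None:
        self.primary = primary
        self.replicas: list = list(replicas or [])
        self._next = 0

    def add_replica(self, dsn: str) -> None:
        # Scaling reads later is just registering another replica.
        self.replicas.append(dsn)

    def for_write(self) -> str:
        return self.primary

    def for_read(self) -> str:
        if not self.replicas:
            return self.primary               # no replicas yet: reads hit the primary
        dsn = self.replicas[self._next % len(self.replicas)]
        self._next += 1                       # simple round-robin across replicas
        return dsn
```

Because PostgreSQL replicas usually replicate asynchronously, a read routed to a replica can briefly lag a write to the primary, so paths that must read their own writes may still need the primary.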

We know the Data Receivers will need to scale out to multiple instances under heavy volume, but we don’t need to add more today. When we do, it’s as simple as starting another instance and adding it to the load balancer.
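The “adding it to the load balancer” step can be modeled in a few lines. In practice a managed load balancer (for example, the one Azure provides when a Web App is scaled out to several instances) does this for you; the hypothetical `RoundRobinBalancer` below only illustrates the idea, including skipping instances that fail a health check, which is how a second receiver removes a single point of failure.

```python
from typing import Callable


class RoundRobinBalancer:
    """Hypothetical in-process load balancer for Data Receiver instances."""

    def __init__(self, is_healthy: Callable[[str], bool]) -> None:
        self.instances: list = []
        self.is_healthy = is_healthy
        self._next = 0

    def add_instance(self, address: str) -> None:
        # Scaling out: start another receiver and register it here.
        self.instances.append(address)

    def pick(self) -> str:
        # Try each registered instance at most once, skipping unhealthy ones.
        for _ in range(len(self.instances)):
            address = self.instances[self._next % len(self.instances)]
            self._next += 1
            if self.is_healthy(address):
                return address
        raise RuntimeError("no healthy receiver instances")
```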

We also know the Data Processors will need to scale out once a single instance can’t process messages fast enough, but one is fine for today. When we need more, we simply deploy another container instance.
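Scaling the Data Processors this way works because several consumers can read from the same queue, with each message handed to only one of them (the competing consumers pattern). Here is a hedged Python sketch that uses threads and `queue.Queue` as stand-ins for container instances and the message bus; `run_processors` is a hypothetical helper.

```python
import queue
import threading


def run_processors(messages: list, worker_count: int) -> dict:
    """Drain a shared queue with `worker_count` competing Data Processor workers."""
    bus: "queue.Queue[int]" = queue.Queue()
    for m in messages:
        bus.put(m)

    handled_by: dict = {}
    lock = threading.Lock()

    def worker(name: str) -> None:
        while True:
            try:
                message = bus.get_nowait()    # each message goes to exactly one worker
            except queue.Empty:
                return                        # queue drained: this worker stops
            with lock:
                handled_by[message] = name    # stand-in for "process and persist"
            bus.task_done()

    threads = [
        threading.Thread(target=worker, args=(f"processor-{i}",))
        for i in range(worker_count)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return handled_by
```

Going from one processor to three is a change to `worker_count`, not to the receivers or the message format, which mirrors deploying another container instance.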

With this “ready to scale” setup, we’ve increased the cost of running the system only from about $60 a month to around $100 a month, and we can now scale up and down quickly and easily as needed.

Stage 3

If you make it to Stage 3, your product is likely doing very well! You have a boatload of usage and need to accommodate it, so it’s time to scale for real.

Luckily, you’ve set yourself up for success and are not going to be caught off guard. Following the game plan from Stage 2, you can scale the portions of your application that need it in minutes.

You’ll still want to be smart about it, as costs can quickly explode in this stage. Once you’ve dealt with the initial scaling needs, you can begin working on auto-scaling systems that add and remove instances based on utilization, so you’re spending those hosting dollars as effectively as possible.
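One common way to decide how many instances to run is a proportional rule. The sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × current utilization / target utilization), plus a tolerance band and min/max limits. Azure's own autoscale is configured with rules rather than written as code, so treat `desired_instances` as a hypothetical illustration of the decision, not an Azure API.

```python
import math


def desired_instances(current: int, utilization: float, target: float,
                      minimum: int = 1, maximum: int = 10,
                      tolerance: float = 0.1) -> int:
    """Proportional scaling rule modeled on the Kubernetes HPA formula.

    `utilization` and `target` are fractions, e.g. 0.75 means 75% average CPU.
    """
    ratio = utilization / target
    if abs(ratio - 1.0) <= tolerance:
        desired = current                       # close enough to target: avoid churn
    else:
        desired = math.ceil(current * ratio)    # scale in proportion to the load
    return max(minimum, min(maximum, desired))  # respect availability and cost limits
```

For example, `desired_instances(2, 0.75, 0.5)` returns 3. The `maximum` cap is where the cost discipline comes in: it bounds how far a traffic spike can run up the hosting bill.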

Final Points

While it’s not a one-size-fits-all strategy, taking a staged approach like this can often be an effective way to mitigate risk and save some money. If you have a larger development team, you’d probably separate the service applications sooner to let different groups work in parallel on features, but the same concepts apply.

Too often, teams jump straight to the final system architecture because it’s the “right” way to build it. For startups in particular, this can be a poor use of time: building for a more complex infrastructure lengthens the development process and takes time away from the product itself. It also racks up hosting fees before the product is really there.

The most crucial aspects of new software are shipping, learning, and adoption. What you learn could drastically change the way you design a scalable infrastructure in the future.

If you have other thoughts or experiences, I’d enjoy hearing from you. If you’re looking for a team that can guide you through this process, look no further than the engineering team at Cypress North.

Meet the Author

Matthew Mombrea

Matt is our Chief Technology Officer and one of the founders of our agency. He started Cypress North in 2010 with Greg Finn, and now leads our Buffalo office. As the head of our development team, Matt oversees all of our technical strategy and software and systems design efforts.

With more than 19 years of software engineering experience, Matt has the knowledge and expertise to help our clients find solutions that will solve their problems and help them reach their goals. He is dedicated to doing things the right way and finding the right custom solution for each client, all while accounting for long-term maintainability and technical debt.

Matt is a Buffalo native and graduated from St. Bonaventure University, where he studied computer science.

When he’s not at work, Matt enjoys spending time with his kids and his dog. He also likes to golf, snowboard, and roast coffee.